Data Platform

« Analyze, explore, decide, activate »

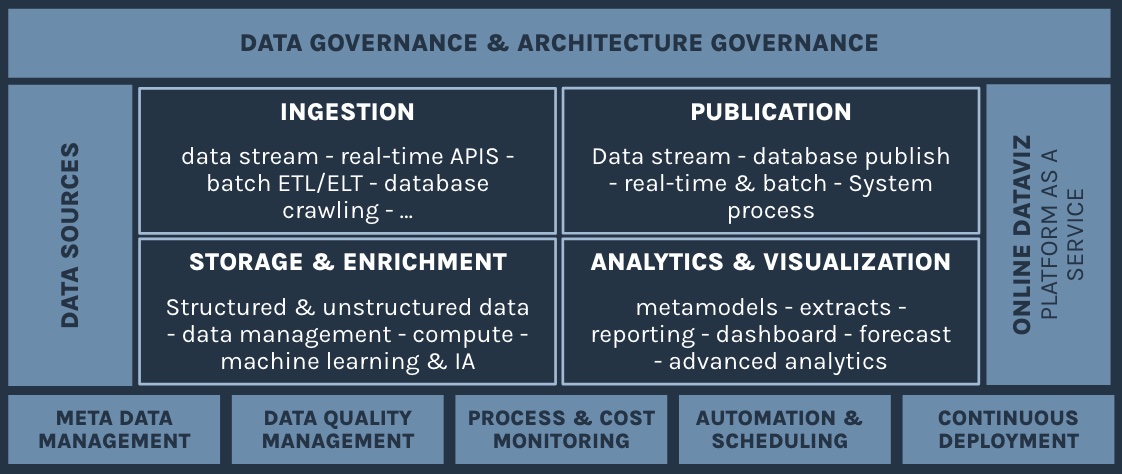

- Transitioning from old-school business intelligence to Data Platforms

- Gathering and organizing all structured and unstructured company data

- Accelerating AI-assisted decision-making

- Empowering business teams to analyze their data in a free and exploratory manner

- Re-injecting enriched data from the data platform into operational IT components

- Support and implementation of a data platform

- Solutions and architecture selection

- Organization and governance

- Data mesh, data product, data catalog, data platform as a service approach

- Data processing support (dataviz, reporting, reverse ETL, …)

- Monitoring and optimizing the data platform

Organization and governance

Data governance is a set of processes, policies, and controls that ensure the quality, confidentiality, security, and compliance of data within an organization. It includes defining responsibilities, setting standards and rules, managing access, documenting metadata, managing data flows, and more.

Combining a data platform with data governance means integrating governance practices into the design, implementation, and use of the platform, particularly for data security, data quality, regulatory compliance, and metadata.

By integrating data governance into the data platform, organizations can better control and manage their data while making the most of its analytical potential. It also helps ensure that data is used responsibly and in compliance with regulatory requirements.

Data catalog

Data cataloging is the process of creating, maintaining, and managing a centralized catalog of all the data available within an organization. This catalog acts as a repository or directory for the information and data stored in the company's various systems, databases, and other sources. Among other things, it relies on metadata management and centralization tools to organize, find, and manage a large volume of data (files, tables, …) from a single location, with a complete 360° view of the databases: location, profile, statistics, comments, summaries, …

The main purpose of data cataloging is to make it easy for users to find, access, and understand the data they need for their business activities or analytics. The data catalog provides metadata describing the data, such as its origin, structure, quality, privacy, ownership, and so on. This helps ensure that data is used efficiently, securely, and in compliance with regulations.
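To make the idea concrete, here is a minimal sketch of a catalog as an in-memory registry of metadata entries that users can search. It is an illustration only: the class names, field names, and sample datasets (`crm.customers`, `erp.invoices`) are assumptions, not the data model of any particular catalog product.

```python
from dataclasses import dataclass, field


@dataclass
class CatalogEntry:
    """Metadata describing one dataset (all field names are illustrative)."""
    name: str
    origin: str                      # source system the data comes from
    owner: str                       # team accountable for the dataset
    columns: dict[str, str]          # column name -> type (the structure)
    contains_personal_data: bool = False
    tags: list[str] = field(default_factory=list)


class DataCatalog:
    """A toy in-memory catalog: register entries, then search them."""

    def __init__(self) -> None:
        self._entries: dict[str, CatalogEntry] = {}

    def register(self, entry: CatalogEntry) -> None:
        self._entries[entry.name] = entry

    def search(self, keyword: str) -> list[CatalogEntry]:
        """Return entries whose name, tags, or column names mention the keyword."""
        kw = keyword.lower()
        return [
            e for e in self._entries.values()
            if kw in e.name.lower()
            or any(kw in t.lower() for t in e.tags)
            or any(kw in c.lower() for c in e.columns)
        ]


catalog = DataCatalog()
catalog.register(CatalogEntry(
    name="crm.customers", origin="CRM export", owner="sales-data",
    columns={"customer_id": "int", "email": "str"},
    contains_personal_data=True, tags=["customer", "pii"],
))
catalog.register(CatalogEntry(
    name="erp.invoices", origin="ERP database", owner="finance-data",
    columns={"invoice_id": "int", "customer_id": "int", "amount": "float"},
    tags=["billing"],
))

# A user looking for customer data finds both datasets and who owns them.
for entry in catalog.search("customer"):
    print(entry.name, "owned by", entry.owner)
```

A real catalog would add persistence, access control, and automated metadata harvesting; the point here is only that search runs over metadata, not over the data itself.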

Data mesh / data domains / data product

A data mesh approach represents a paradigm shift away from traditional, centralized data management.

This includes a number of concepts such as:

– Data Mesh: Decentralizing data management within an organization by adopting a business domain-based approach. Instead of having a central data management team, each business area is responsible for its own data, its quality, governance, and usage.

– Data Domains: Data domains represent the different functional or business areas within an organization. Each data domain is responsible for collecting, storing, processing, and analyzing data specific to that domain. This approach fosters accountability and expertise for data at the local level, while allowing for better collaboration between teams.

– Data Products: Data products are datasets that are organized, managed, and made available to end users as value-added end products. They can take different forms, such as reports, dashboards, APIs, ready-to-use datasets, and more. Each data domain is responsible for creating and managing its own data products to meet specific business needs.

Data collection and integration

– Data collection: Data collection is the process of gathering data from different sources, internal or external to the organization. These sources can include databases, flat files, IoT sensors, external APIs, social media, mobile devices, and more. The challenge is to automate this collection using various tools and technologies, according to the specific needs of the organization (streaming, real-time, asynchronous flows, etc.).

– Data integration: Once the data has been collected, it must be integrated and consolidated into a coherent, unified, and usable whole. Data integration involves transforming, cleansing, and enriching data to ensure its quality and consistency. This requires choosing and implementing the right technical solutions, ranging from traditional ETL (or, more frequently today, ELT) to streaming data pipelines, etc.
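The collection and integration steps can be sketched as a small ETL pipeline. This is a simplified illustration under stated assumptions: the two sources (`CRM_CSV`, `SHOP_API`), their fields, and the table layout are invented for the example, and an in-memory SQLite database stands in for the data platform.

```python
import csv
import io
import sqlite3

# Two hypothetical sources with inconsistent formatting.
CRM_CSV = "id,email,country\n1, Alice@Example.com ,fr\n2,bob@example.com,DE\n"
SHOP_API = [
    {"customer": 2, "total": "19.90"},
    {"customer": 1, "total": "5.00"},
    {"customer": 2, "total": "n/a"},   # invalid amount, dropped during cleansing
]


def extract_customers(raw_csv: str) -> list[dict]:
    """Extract: parse the flat-file export into rows."""
    return list(csv.DictReader(io.StringIO(raw_csv)))


def transform_customers(rows: list[dict]) -> list[tuple]:
    """Cleanse: trim whitespace, lowercase emails, uppercase country codes."""
    return [
        (int(r["id"]), r["email"].strip().lower(), r["country"].strip().upper())
        for r in rows
    ]


def transform_orders(rows: list[dict]) -> list[tuple]:
    """Cleanse: keep only orders whose total parses as a number."""
    clean = []
    for r in rows:
        try:
            clean.append((r["customer"], float(r["total"])))
        except ValueError:
            continue
    return clean


def load(conn: sqlite3.Connection, customers: list[tuple], orders: list[tuple]) -> None:
    """Load: write the cleansed rows into the target tables."""
    conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT, country TEXT)")
    conn.execute("CREATE TABLE orders (customer_id INTEGER, total REAL)")
    conn.executemany("INSERT INTO customers VALUES (?, ?, ?)", customers)
    conn.executemany("INSERT INTO orders VALUES (?, ?)", orders)


conn = sqlite3.connect(":memory:")
load(conn, transform_customers(extract_customers(CRM_CSV)), transform_orders(SHOP_API))

# The consolidated, unified view: one row per customer with total spend.
unified = conn.execute(
    "SELECT c.email, c.country, SUM(o.total) FROM customers c "
    "JOIN orders o ON o.customer_id = c.id GROUP BY c.id ORDER BY c.id"
).fetchall()
print(unified)
```

In an ELT variant, the raw rows would be loaded first and the cleansing would run as SQL inside the platform; the transformations themselves stay the same.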

Modeling, Enrichment, AI

Data modeling is the process of designing structures and schemas that represent data in a way that is understandable and usable to users and applications.

Data enrichment is the process of improving the quality, relevance, and value of data by adding additional information from different sources. This can include metadata, contextual information, geographic data, demographics, and more. Data enrichment can also involve processes such as normalizing, deduplicating, validating, and correcting data to ensure data quality and accuracy.
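As a rough illustration of these enrichment steps, the sketch below normalizes, validates, and deduplicates a few records, then adds geographic context from a reference table. The record fields, the `COUNTRY_REGION` lookup, and the deliberately simple email pattern are all assumptions made for the example.

```python
import re

# Deliberately simple pattern, for illustration only.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

# Hypothetical reference data used to add geographic context.
COUNTRY_REGION = {"FR": "Europe", "DE": "Europe", "US": "North America"}


def normalize(record: dict) -> dict:
    """Normalize: trim whitespace, lowercase emails, uppercase country codes."""
    return {
        "email": record["email"].strip().lower(),
        "country": record["country"].strip().upper(),
    }


def is_valid(record: dict) -> bool:
    """Validate: keep only records with a plausible email address."""
    return bool(EMAIL_RE.match(record["email"]))


def enrich(records: list[dict]) -> list[dict]:
    """Normalize, validate, deduplicate on email, then add a region."""
    seen: set[str] = set()
    result = []
    for raw in records:
        rec = normalize(raw)
        if not is_valid(rec) or rec["email"] in seen:
            continue
        seen.add(rec["email"])
        rec["region"] = COUNTRY_REGION.get(rec["country"], "Unknown")
        result.append(rec)
    return result


raw_records = [
    {"email": " Alice@Example.com", "country": "fr"},
    {"email": "alice@example.com", "country": "FR"},   # duplicate after normalization
    {"email": "not-an-email", "country": "US"},        # fails validation
    {"email": "carol@example.org", "country": "br"},   # no region in reference data
]
enriched = enrich(raw_records)
print(enriched)
```

Normalizing before deduplicating matters here: the first two records only become recognizable as duplicates once case and whitespace have been cleaned up.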

By combining data modeling, data enrichment, and artificial intelligence, organizations can make the most of their data to gain actionable insights, make better informed decisions, and create business value.

Data Processing and Activation (dataviz, reverse ETL)

Data visualization aims to make complex data more understandable and meaningful by presenting it visually, through graphs, tables, maps, diagrams, and other visual elements.

It aims to:

– make data easier to understand, speeding up analysis and decision-making.

– identify trends, correlations, and patterns that may not be apparent in the raw data.

– communicate insights and results effectively to stakeholders.

– enable interactive exploration to delve deeper into the data and uncover additional insights.

There are many data visualization solutions, each tailored to specific data types and analysis objectives.

The operational activation of data from a data platform increasingly involves “reverse ETL”, a relatively new concept that takes data already residing in a data platform and reinjects it into operational systems or business applications.

Reverse ETL brings this data back into applications and operational systems so that it can be used proactively, for example to personalize the user experience, feed real-time dashboards, or trigger automated actions.
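A minimal sketch of a reverse ETL job, assuming an in-memory SQLite database as a stand-in for the data platform and a hypothetical `FakeCrmClient` in place of a real operational system's API. The table name, the `lifetime_value` metric, and the 1000 threshold for the "vip" segment are invented for illustration.

```python
import sqlite3


class FakeCrmClient:
    """Stand-in for an operational system's API (hypothetical)."""

    def __init__(self) -> None:
        self.contacts: dict[str, dict] = {}

    def upsert_contact(self, email: str, attributes: dict) -> None:
        # Create the contact if missing, then merge in the new attributes.
        self.contacts.setdefault(email, {}).update(attributes)


def reverse_etl(warehouse: sqlite3.Connection, crm: FakeCrmClient) -> int:
    """Read enriched metrics from the platform and push them to the CRM."""
    rows = warehouse.execute(
        "SELECT email, lifetime_value FROM customer_metrics"
    ).fetchall()
    for email, ltv in rows:
        # Illustrative business rule: the threshold is an assumption.
        segment = "vip" if ltv >= 1000 else "standard"
        crm.upsert_contact(email, {"lifetime_value": ltv, "segment": segment})
    return len(rows)


# Simulate the data platform with an enriched metrics table.
warehouse = sqlite3.connect(":memory:")
warehouse.execute("CREATE TABLE customer_metrics (email TEXT, lifetime_value REAL)")
warehouse.executemany(
    "INSERT INTO customer_metrics VALUES (?, ?)",
    [("alice@example.com", 1250.0), ("bob@example.com", 80.0)],
)

crm = FakeCrmClient()
synced = reverse_etl(warehouse, crm)
print(synced, crm.contacts)
```

Using an upsert keeps the sync idempotent: running the job again updates existing contacts instead of creating duplicates, which is what lets the operational system act on the segment (for example, to personalize an offer).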